CRS

Job Statuses

| Status | Description |
|---|---|
| CREATED | In the CRS system but not yet a candidate to run; not yet seen by the submit-daemon |
| QUEUED | Submitted to the negotiator; will eventually run |
| STAGING | Jobs whose inputs require staging enter this state |
| SUBMITTED | All stage-files (if any) are ready; job has been submitted to Condor |
| IMPORTING | Condor job is running and processing the input files |
| RUNNING | All input files are staged to the node; job is executing now |
| EXPORTING | Job execution has ended; files are being exported |
| KILLED | Jobs that were staging, submitted, or running can be killed. Can be reset from here |
| HELD | Jobs that get held by Condor enter this state. Can be reset from here |
| RETRY | Jobs whose files were staged but were back on tape by the time the job ran; will be automatically resubmitted |
| ERROR | Jobs where something went wrong; see the error message for details |
| DONE | Jobs that have finished without error; will be cleaned up if auto_remove is set |

Normal Job Progression

CREATED->QUEUED->[STAGING]->SUBMITTED->IMPORTING->RUNNING->EXPORTING->DONE

QUEUED and STAGING are handled by the submit-daemon (submitd); IMPORTING through EXPORTING take place inside Condor.
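The normal progression can be written down as a small lookup, which is handy when checking whether a status change seen in a log is part of the expected path. This is only an illustrative sketch: the function names and the `HANDLER` mapping are hypothetical and not part of CRS, and the abnormal states (KILLED, HELD, RETRY, ERROR) are deliberately left out.

```python
from typing import Dict, List

# Which component owns each state, as far as the text above states it.
# States not listed here are not attributed to either component.
HANDLER: Dict[str, str] = {
    "QUEUED": "submit-daemon",
    "STAGING": "submit-daemon",
    "IMPORTING": "condor",
    "RUNNING": "condor",
    "EXPORTING": "condor",
}


def normal_job_progression(needs_staging: bool) -> List[str]:
    """Return the expected status sequence for a job that hits no errors."""
    states = ["CREATED", "QUEUED"]
    if needs_staging:
        # STAGING is optional: only jobs whose inputs need staging pass through it.
        states.append("STAGING")
    states += ["SUBMITTED", "IMPORTING", "RUNNING", "EXPORTING", "DONE"]
    return states


def is_normal_step(current: str, nxt: str, needs_staging: bool) -> bool:
    """True if current -> nxt is one step along the normal progression."""
    seq = normal_job_progression(needs_staging)
    if current not in seq or nxt not in seq:
        return False
    return seq.index(nxt) == seq.index(current) + 1
```

For example, `is_normal_step("QUEUED", "SUBMITTED", needs_staging=False)` is true, while the same step with `needs_staging=True` is not, because STAGING would be expected in between.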

File Status Sequences

Each file goes through a sequence of statuses, just like a job. Here are the possible statuses and their transitions.

Input Files

| Status | Description |
|---|---|
| NULL | Files are created in this state by default |
| REQUESTED | Files that have stage requests in the HPSS Batch system |
| STAGING | Files currently being staged by HPSS; not yet ready |
| READY | Files whose stage requests have come back successfully |
| MISS | Files that were staged, but the job ran later and found them wiped from the cache |
| IMPORTING | Files that are currently being PFTP'd to the execute node |
| DONE | Files that have been imported successfully |
| ERROR | Something went wrong staging/importing the file; see the message for details |
| UNKNOWN | Files lost/dropped by the HPSS Batch system |

Normal Progression

NULL->[REQUESTED->STAGING->READY]->IMPORTING->DONE

REQUESTED through READY are handled within the submit-daemon, and only for file types that require pre-staging (HPSS).

Output Files

| Status | Description |
|---|---|
| NULL | Default state; files are created here |
| FOUND | Job finished executing and the output file was found in the working directory |
| EXPORTING | Job is actively staging the file out |
| DONE | File is in its final destination |
| NOT_FOUND | Job finished but the file wasn't created by the job |
| ERROR | Something went wrong; see the message for details |

Normal Progression

NULL->FOUND->EXPORTING->DONE

Queue Priority

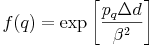

The following formula shows how shares of the farm are calculated from queue priorities: each queue q gets a factor based on its priority p_q, and the factors are then normalized so that they sum to 1.0.
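The normalization step can be sketched on its own. The per-queue factor is whatever the formula on this page defines; it is not reproduced here, so the sketch takes it as a caller-supplied function. The names `normalized_shares` and `factor` are hypothetical and not taken from CRS.

```python
from typing import Callable, Dict


def normalized_shares(
    priorities: Dict[str, float],
    factor: Callable[[float], float],
) -> Dict[str, float]:
    """Turn per-queue priorities p_q into farm shares that sum to 1.0.

    `factor` maps a priority p_q to that queue's unnormalized factor.
    It is an assumption of this sketch; substitute the real CRS formula.
    """
    raw = {queue: factor(p) for queue, p in priorities.items()}
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("queue factors must sum to a positive value")
    # Dividing every factor by the total makes the shares sum to 1.0.
    return {queue: f / total for queue, f in raw.items()}
```

As a usage example with a placeholder factor (the identity function, chosen only for illustration), `normalized_shares({"a": 1, "b": 3}, factor=lambda p: p)` gives queue `a` a quarter of the farm and queue `b` three quarters.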

Queued Job Scaling

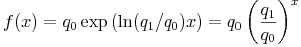

The target size of the queue of idle and staging jobs shrinks as the farm fills up. You provide two parameters in the config file, q_empty and q_full, which are percentages. They give the total number of idle+staging jobs as a percentage of the slots available to run CRS jobs. For example, q_empty = 80 and q_full = 4 mean that with no jobs running the number of staging/submitted jobs would be 0.8 * farm_size, and with the farm full it would be 0.04 * farm_size. Between these two points the target follows an exponential decay given by the following formula.

Where 0 < q_n < 1 (in the config file these are percentages, so enter values from 0 to 100)
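To make the endpoints concrete, here is a sketch of one exponential curve that passes through both of them. The exact decay formula used by CRS is not reproduced here, so the interpolation below (a geometric blend of q_empty and q_full by the fraction of the farm in use) is an assumption, as are the function and parameter names.

```python
def target_queue_size(
    running: int,
    farm_size: int,
    q_empty: float,
    q_full: float,
) -> float:
    """Target number of idle+staging jobs for a given farm occupancy.

    q_empty and q_full are percentages (0-100), as in the config file.
    ASSUMPTION: the decay is modelled as q_empty * (q_full / q_empty) ** fill,
    which is exponential in `fill` and hits both configured endpoints.
    The real CRS formula may have a different shape between them.
    """
    if farm_size <= 0:
        raise ValueError("farm_size must be positive")
    for name, value in (("q_empty", q_empty), ("q_full", q_full)):
        if not 0 < value < 100:
            raise ValueError(f"{name} must be a percentage between 0 and 100")

    # Fraction of the farm currently running CRS jobs, clamped to [0, 1].
    fill = min(max(running / farm_size, 0.0), 1.0)

    # Convert the percentages to fractions before interpolating.
    empty_frac = q_empty / 100.0
    full_frac = q_full / 100.0
    return farm_size * empty_frac * (full_frac / empty_frac) ** fill
```

With q_empty = 80 and q_full = 4 on a 1000-slot farm, this returns about 800 when nothing is running and about 40 when the farm is full, matching the example above.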